How to Calculate Value at Risk (VaR) for a Trading Strategy

Understanding how to accurately calculate Value at Risk (VaR) is essential for traders and risk managers aiming to quantify potential losses in their portfolios. This article provides a comprehensive guide on the process, methods, and considerations involved in calculating VaR for trading strategies, ensuring you have the knowledge needed to implement effective risk management practices.

What Is Value at Risk (VaR)?

Value at Risk (VaR) is a statistical measure that estimates the loss a portfolio is not expected to exceed over a specified time horizon at a given confidence level. For example, if your portfolio has a 1-day VaR of $1 million at 95% confidence, there is only a 5% chance that losses will exceed this amount within one day. VaR says nothing about how large losses can be once that threshold is breached, so it is a threshold rather than a worst case. Traders use VaR to understand potential downside risk and allocate capital accordingly.

Why Is Calculating VaR Important in Trading?

In trading environments, where market volatility can be unpredictable, quantifying potential losses helps traders make informed decisions about position sizing and risk exposure. Accurate VaR calculations enable traders to set stop-loss levels, determine appropriate leverage limits, and comply with regulatory requirements such as those set out in the Basel Accords. Moreover, understanding the limitations of VaR ensures that traders do not rely solely on this metric but incorporate additional risk measures like Expected Shortfall or stress testing.

Key Steps in Calculating VaR for Your Trading Strategy

Calculating VaR involves several systematic steps designed to analyze historical data or simulate future scenarios:

1. Define Your Time Horizon

The first step is selecting an appropriate time frame over which you want to estimate potential losses—commonly one day for intraday trading or longer periods like one month depending on your strategy. The choice depends on your trading frequency and investment horizon; shorter horizons are typical for active traders while longer ones suit institutional investors.

2. Select Confidence Level

Next is choosing the confidence level—usually set at 95% or 99%. This percentage indicates how confident you are that actual losses will not exceed your calculated VaR during the specified period. Higher confidence levels provide more conservative estimates but may also lead to larger capital reserves being set aside.

3. Gather Historical Data

Historical data forms the backbone of most VaR calculations. You need sufficient past price movements or returns relevant to your assets or portfolio components—such as stocks, commodities, currencies—to model future risks accurately.

4. Estimate Return Distribution

Using historical data points collected over your chosen period—for example: daily returns over six months—you estimate how asset prices have historically behaved by modeling their return distribution. This can involve calculating mean returns and standard deviations if assuming normality or fitting other distributions based on empirical data.

5. Calculate Portfolio Returns

For portfolios containing multiple assets with different weights, compute combined returns considering correlations among assets:

- Weighted Returns: Multiply each asset's return by its proportion in the portfolio.

- Covariance Matrix: Use historical covariance between assets' returns for more precise modeling.

This step ensures you capture diversification effects when estimating overall portfolio risk.
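The weighting and covariance step can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the return series, the 60/40 weights, and the function names (`portfolio_returns`, `covariance_matrix`, `portfolio_variance`) are all hypothetical, and the weights are assumed to sum to 1.

```python
from statistics import mean, variance

def portfolio_returns(asset_returns, weights):
    """Combine per-asset return series into one portfolio return series."""
    return [sum(w * r for w, r in zip(weights, period))
            for period in zip(*asset_returns)]

def covariance_matrix(asset_returns):
    """Sample covariance matrix (n - 1 denominator) of the return series."""
    n = len(asset_returns[0])
    means = [mean(series) for series in asset_returns]
    k = len(asset_returns)

    def cov(i, j):
        return sum((a - means[i]) * (b - means[j])
                   for a, b in zip(asset_returns[i], asset_returns[j])) / (n - 1)

    return [[cov(i, j) for j in range(k)] for i in range(k)]

def portfolio_variance(weights, cov):
    """Portfolio variance w' * Sigma * w."""
    k = len(weights)
    return sum(weights[i] * cov[i][j] * weights[j]
               for i in range(k) for j in range(k))

# Hypothetical daily returns for two assets and a 60/40 allocation
stock = [0.010, -0.020, 0.015, -0.005, 0.007]
bond = [-0.002, 0.004, -0.001, 0.003, 0.000]
weights = [0.6, 0.4]

port = portfolio_returns([stock, bond], weights)
cov = covariance_matrix([stock, bond])
var_p = portfolio_variance(weights, cov)

# Both routes give the same portfolio variance (up to rounding)
direct = variance(port)
```

The variance computed from the covariance matrix matches the variance of the weighted return series, which is why either route can feed the later VaR steps.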

6. Determine Quantiles Based on Distribution

Depending on your chosen method:

- For Historical VaR, identify percentile thresholds directly from historical return data.

- For Parametric Methods, calculate quantiles assuming specific distributions like normal distribution.

- For Monte Carlo Simulation, generate numerous simulated paths based on estimated parameters (mean/variance/covariance), then analyze these simulations’ outcomes.

7. Compute Final VaR Estimate

Finally:

- In Historical methods: select the loss value corresponding to your confidence percentile.

- In Parametric approaches: multiply the z-score for your confidence level by the standard deviation of returns, subtract the mean return, and scale the result by the portfolio value.

- In Monte Carlo simulations: determine the percentile loss across all simulated outcomes.

This result is the loss your portfolio is not expected to exceed over the chosen horizon at the chosen confidence level.
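As a rough sketch of the historical and parametric calculations, the snippet below uses only the Python standard library. The return series, the $1,000,000 portfolio value, and the function names are illustrative assumptions; the historical quantile uses a simple rank rule without interpolation, and the parametric version assumes normally distributed returns.

```python
import math
from statistics import NormalDist, mean, stdev

def historical_var(returns, confidence=0.95):
    """Historical VaR as a positive fraction of portfolio value."""
    losses = sorted(-r for r in returns)  # losses in ascending order
    # Rank of the loss at the confidence percentile (no interpolation)
    rank = math.ceil(round(confidence * len(losses), 9))
    return losses[min(len(losses), max(rank, 1)) - 1]

def parametric_var(returns, confidence=0.95):
    """Normal-distribution VaR: z * sigma - mu, as a positive fraction."""
    z = NormalDist().inv_cdf(confidence)
    return z * stdev(returns) - mean(returns)

# Hypothetical daily portfolio returns (20 observations)
returns = [0.012, -0.008, 0.004, -0.021, 0.009, 0.003, -0.015, 0.007,
           -0.002, 0.011, -0.034, 0.006, 0.001, -0.009, 0.014, -0.004,
           0.008, -0.012, 0.005, 0.002]
portfolio_value = 1_000_000  # assumed portfolio size in dollars

hist_95 = historical_var(returns, 0.95) * portfolio_value
param_95 = parametric_var(returns, 0.95) * portfolio_value
param_99 = parametric_var(returns, 0.99) * portfolio_value
```

With only 20 observations, the 95% historical figure is simply the second-largest loss in the sample, which shows how sensitive this method is to the length of the data window.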

Common Methods Used in Calculating VaR

Different techniques exist depending upon complexity needs and available data:

Historical Simulation:

Uses actual past market movements without assuming any specific distribution; straightforward but relies heavily on recent history which may not predict future extremes effectively.

Parametric Method:

Assumes asset returns follow known distributions such as normal distribution; computationally simple but may underestimate tail risks during volatile periods when assumptions break down.

Monte Carlo Simulation:

Generates thousands of possible future scenarios based on stochastic models; highly flexible allowing incorporation of complex features like non-normality but computationally intensive requiring robust models and high-quality input data.
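A minimal Monte Carlo sketch is shown below. It assumes one-day portfolio returns are drawn from a normal distribution with a hypothetical mean and standard deviation; a realistic model would typically simulate correlated assets or fat-tailed returns instead. The function name, parameter values, and seed are illustrative only.

```python
import math
import random
from statistics import NormalDist

def monte_carlo_var(mu, sigma, confidence=0.95, n_sims=100_000, seed=42):
    """Simulate one-day returns and read VaR off the simulated loss quantile."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    losses = sorted(-rng.gauss(mu, sigma) for _ in range(n_sims))
    rank = math.ceil(round(confidence * n_sims, 9))
    return losses[min(n_sims, max(rank, 1)) - 1]

mu, sigma = 0.0005, 0.012  # assumed daily mean and volatility
mc_var = monte_carlo_var(mu, sigma, confidence=0.95)

# Under the same normal assumption the closed-form answer is z * sigma - mu,
# so the simulated figure should land close to it.
analytic_var = NormalDist().inv_cdf(0.95) * sigma - mu
```

Because the simulation here uses the same normal assumption as the parametric method, the two results agree closely; the value of Monte Carlo appears once the return model is replaced with something the closed-form formula cannot handle.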

Considerations When Applying These Methods

While calculating VaR provides valuable insight into the risks a trading strategy faces, it is also crucial to recognize its limitations:

Model Assumptions: Many methods assume stable market conditions which might not hold during crises leading to underestimation of extreme events.

Data Quality: Reliable historic price data is vital; missing information can distort results significantly.

Time Horizon & Confidence Level: Longer horizons increase uncertainty; higher confidence levels produce more conservative estimates but require larger capital buffers.

By understanding these factors upfront—and supplementing quantitative analysis with qualitative judgment—you enhance overall risk management robustness.
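One common way to see how the horizon affects the estimate is the square-root-of-time rule, sketched below. It is an approximation that assumes independent, identically distributed returns with a mean close to zero; the function name and the $1,000,000 input are illustrative.

```python
import math

def scale_var(one_day_var, horizon_days):
    """Scale a 1-day VaR to a longer horizon using the square-root-of-time rule."""
    return one_day_var * math.sqrt(horizon_days)

# A hypothetical 1-day VaR of $1,000,000 scaled to a 10-day horizon
ten_day_var = scale_var(1_000_000, 10)
```

When returns are autocorrelated or volatility clusters, this scaling can understate or overstate the longer-horizon risk, so it is best treated as a first approximation.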

Incorporating Stress Testing & Complementary Measures

Given the limitations inherent in traditional VaR models, especially during extraordinary market events, it is advisable to employ stress testing alongside VaR calculations:

- Simulate extreme scenarios beyond historical experience

- Assess impact under hypothetical shocks

- Combine results with other metrics such as Expected Shortfall

These practices help ensure comprehensive coverage against unforeseen risks affecting trading positions.
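The sketch below illustrates two of these complementary measures: a historical Expected Shortfall and a simple scenario-based stress loss. The return series, position sizes, and shock percentages are hypothetical, and Expected Shortfall is computed here as the average of losses at or beyond the VaR threshold, which is one of several conventions in use.

```python
import math

def expected_shortfall(returns, confidence=0.95):
    """Average of the losses at or beyond the historical VaR threshold."""
    losses = sorted(-r for r in returns)
    rank = math.ceil(round(confidence * len(losses), 9))
    tail = losses[min(len(losses), max(rank, 1)) - 1:]
    return sum(tail) / len(tail)

def stress_loss(position_values, shocks):
    """Loss from applying a hypothetical percentage shock to each position."""
    return -sum(value * shock for value, shock in zip(position_values, shocks))

# Hypothetical daily portfolio returns (20 observations)
returns = [0.012, -0.008, 0.004, -0.021, 0.009, 0.003, -0.015, 0.007,
           -0.002, 0.011, -0.034, 0.006, 0.001, -0.009, 0.014, -0.004,
           0.008, -0.012, 0.005, 0.002]
es_95 = expected_shortfall(returns, 0.95)

# Assumed scenario: equities fall 20%, bonds fall 5%
positions = [600_000, 400_000]
scenario = [-0.20, -0.05]
scenario_loss = stress_loss(positions, scenario)
```

Expected Shortfall is never smaller than the VaR at the same confidence level, since it averages the losses in the tail that VaR only marks the edge of.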

Practical Tips for Traders Using VaR-Based Models

To improve the accuracy of VaR calculations:

- Regularly update input data to reflect current market conditions

- Adjust model parameters when significant shifts occur

- Use multiple methods concurrently, for instance combining Historical Simulation with Monte Carlo approaches

- Maintain awareness of model assumptions versus real-world dynamics

Implementing these best practices enhances decision-making precision while aligning with regulatory standards.

How Regulatory Frameworks Influence Your Calculation Approach

Regulatory frameworks such as the Basel Accords require financial institutions to maintain sufficient capital reserves based partly on their calculated VaR figures, a process that emphasizes transparency and robustness in measurement techniques:

- Ensure compliance through documented methodologies

- Validate models periodically

- Incorporate stress testing results into overall risk assessments

Adhering to these requirements helps avoid penalties while fostering trust among stakeholders.

Calculating Value at Risk effectively requires understanding both statistical techniques and practical considerations unique to each trading strategy's context, including the asset types involved, time horizons, and desired confidence levels. By following structured steps, from gathering reliable historical data through sophisticated simulation, and recognizing inherent limitations, you can develop robust measures that support prudent decision-making amid volatile markets. Always complement quantitative analysis with qualitative judgment, and stay up to date with evolving best practices in financial risk management.

kai

2025-05-09 22:08

How do you calculate Value at Risk (VaR) for a trading strategy?


Disclaimer:Contains third-party content. Not financial advice.

See Terms and Conditions.

Subzero Labs completed a $20 million seed round led by Pantera Capital to build Rialo—the first full-stack blockchain network for real-world applications, bridging Web2 usability with Web3 capabilities. Here are the project's core highlights:

💰 Project Features:

Native Real-World Connectivity: Direct integration with web services, no complex middleware needed

Event-Driven Transactions: Automatic execution based on real-world triggers like package delivery, weather insurance

Real-World Data Access: Built-in FICO scores, weather data, market information, and other off-chain data

RISC-V & Solana VM Compatibility: Developers can reuse existing tools and code

🎯 Technical Advantages:

1️⃣ Invisible Infrastructure: Simplified development workflow, letting developers focus on product innovation

2️⃣ Privacy Protection: Suitable for regulated industries like healthcare and finance

3️⃣ Cross-Platform Compatibility: Seamlessly integrate existing systems with blockchain technology

4️⃣ Developer-Centric: Eliminates complex oracle and cross-chain bridge integrations

🏆 Funding Lineup:

Lead: Pantera Capital

Participants: Coinbase Ventures, Hashed, Susquehanna Crypto, Mysten Labs, Fabric Ventures, etc.

Investor Quote: "The only full-stack network for real-world applications"

💡 Team Background:

Founders: Ade Adepoju (former Netflix distributed systems, AMD microchips) & Lu Zhang (former Meta Diem project, large-scale AI/ML infrastructure)

Team from: Meta, Apple, Amazon, Netflix, Google, Solana, Coinbase, and other top-tier companies

Early engineers from Mysten Labs who contributed to Sui network development

🔐 Use Cases:

Asset Tokenization: Real estate, commodities, private equity

Prediction Markets: Event-driven automatic settlement

Global Trading: Cross-border payment and settlement systems

AI Agent Orchestration & Supply Chain Management

IoT-integrated tracking and verification

🌟 Market Positioning:

Solving Developer Retention Crisis: Simplifying blockchain development complexity

Serving trillion-dollar real-world asset tokenization market

Different from throughput-focused Layer-1s, emphasizing practicality and real applications

📱 Development Progress:

Private devnet now live

Early development partner testing underway

Public launch timeline in preparation (specific dates TBD)

Developers can join waitlist for early access

🔮 Core Philosophy: "Rialo isn't a Layer 1"—By making blockchain infrastructure "invisible," developers can build truly real-world connected decentralized applications, rather than just pursuing transaction speed metrics.

Rialo, with strong funding support, top-tier team background, and revolutionary technical architecture, is positioned to drive blockchain technology's transition from experimental protocols to production-ready applications as key infrastructure.

Read the complete technical architecture analysis: 👇 https://blog.jucoin.com/rialo-blockchain-guide/?utm_source=blog

#Rialo #SubzeroLabs #Blockchain #RealWorldAssets

JU Blog

2025-08-05 10:30

🚀 Rialo Blockchain: $20M Seed Round Building Revolutionary Web3 Infrastructure!


3 Days Left! The JuCoin Million Airdrop is going wild! 🎉

👉 RT and lock in your Hashrate airdrop:

✅ KYC

✅ Activate JU Node

🎰 100% win rate Lucky Wheel — win up to 1,000U in a single spin 👉 https://bit.ly/4eDheON

#Jucoin #JucoinVietnam #Airdrop

Lee Jucoin

2025-08-14 03:26

The JuCoin Million Airdrop


🔍About #Xpayra:

🔹Xpayra is a next-generation crypto-financial infrastructure that combines PayFi concepts with Web3 technology, committed to reshaping diversified on-chain financial services such as stablecoin settlement, virtual card payments, and decentralized lending.

🔹The project adopts a modular smart contract framework, zero-knowledge technology, and a high-performance asset aggregation engine to achieve secure interoperability and aggregation of funds, data, and rights across multiple chains.

👉 More details: https://bit.ly/45efKr5

#Jucoin #JucoinVietnam #Xpayra #Airdrop

Lee Jucoin

2025-08-14 03:24

📣Xpayra Officially Joins the JuCoin Ecosystem


What Is the Engle-Granger Two-Step Method for Cointegration Analysis?

The Engle-Granger two-step method is a foundational statistical approach used in econometrics to identify and analyze long-term relationships between non-stationary time series data. This technique helps economists, financial analysts, and policymakers understand whether variables such as interest rates, exchange rates, or commodity prices move together over time in a stable manner. Recognizing these relationships is essential for making informed decisions based on economic theories and market behaviors.

Understanding Cointegration in Time Series Data

Before diving into the specifics of the Engle-Granger method, it’s important to grasp what cointegration entails. In simple terms, cointegration occurs when two or more non-stationary time series are linked by a long-term equilibrium relationship. Although each individual series may exhibit trends or cycles—making them non-stationary—their linear combination results in a stationary process that fluctuates around a constant mean.

For example, consider the prices of two related commodities like oil and gasoline. While their individual prices might trend upward over years due to inflation or market dynamics, their price difference could remain relatively stable if they are economically linked. Detecting such relationships allows analysts to model these variables more accurately and forecast future movements effectively.

The Two Main Steps of the Engle-Granger Method

The Engle-Granger approach simplifies cointegration testing into two sequential steps:

Step 1: Testing for Unit Roots (Stationarity) in Individual Series

Initially, each time series under consideration must be tested for stationarity using unit root tests such as the Augmented Dickey-Fuller (ADF) test. Non-stationary data typically show persistent trends or cycles that violate many classical statistical assumptions.

If both series are found to be non-stationary, meaning they possess unit roots, the next step is to examine whether they share a cointegrating relationship. Conversely, if both series are already stationary, traditional regression analysis suffices without cointegration testing. If only one of them is stationary, the two series are integrated of different orders and cannot be cointegrated in the Engle-Granger sense.

Step 2: Estimating Long-Run Relationship and Testing Residuals

Once it is confirmed that both variables are integrated of order one (I(1)), meaning they become stationary after differencing once, researchers regress one variable on the other using ordinary least squares (OLS). This regression produces residuals that represent deviations from the estimated long-term equilibrium relationship.

The critical part is testing whether these residuals are stationary, using another ADF test or a similar method. Because the residuals come from an estimated regression, the test statistic must be compared against Engle-Granger critical values, which are more negative than the standard ADF ones. If the residuals are stationary, that is, they fluctuate around zero without trending, the original variables are cointegrated: they move together over time despite being individually non-stationary.
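The two steps can be sketched with the Python standard library on simulated data. This is a simplified illustration under stated assumptions: the series are synthetic (a random walk `x` and a `y` built to be cointegrated with it), the residual test is a plain Dickey-Fuller regression without lag augmentation, and the cutoff of -3.34 is the approximate asymptotic 5% Engle-Granger critical value for two variables with a constant. In practice a library routine such as `coint` in `statsmodels.tsa.stattools` would normally be used instead.

```python
import random
from statistics import mean

def ols(y, x):
    """OLS of y on a constant and x; returns (alpha, beta, residuals)."""
    mx, my = mean(x), mean(y)
    beta = (sum((a - mx) * (b - my) for a, b in zip(x, y))
            / sum((a - mx) ** 2 for a in x))
    alpha = my - beta * mx
    resid = [b - alpha - beta * a for a, b in zip(x, y)]
    return alpha, beta, resid

def df_tstat(e):
    """Dickey-Fuller t-statistic: regress diff(e) on lagged e, no constant."""
    lag = e[:-1]
    diff = [b - a for a, b in zip(e[:-1], e[1:])]
    sxx = sum(v * v for v in lag)
    rho = sum(l * d for l, d in zip(lag, diff)) / sxx
    sse = sum((d - rho * l) ** 2 for l, d in zip(lag, diff))
    se = (sse / (len(diff) - 1) / sxx) ** 0.5
    return rho / se

# Simulated data: x is a random walk, y tracks 2 + 0.5 * x plus stationary noise
rng = random.Random(0)
x = [0.0]
for _ in range(499):
    x.append(x[-1] + rng.gauss(0, 1))
y = [2.0 + 0.5 * xi + rng.gauss(0, 1) for xi in x]

# Step 2a: estimate the long-run relationship
alpha, beta, resid = ols(y, x)

# Step 2b: test the residuals for stationarity
t_stat = df_tstat(resid)
CRIT_5PCT = -3.34  # approximate Engle-Granger 5% critical value (assumption above)
cointegrated = t_stat < CRIT_5PCT
```

A test statistic below the critical value rejects the null hypothesis of no cointegration; here the residuals are white noise by construction, so the rejection is decisive.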

Significance of Cointegration Analysis

Identifying cointegrated relationships has profound implications across economics and finance:

- Long-Term Forecasting: Recognizing stable relationships enables better prediction models.

- Policy Formulation: Governments can design policies knowing certain economic indicators tend to move together.

- Risk Management: Investors can hedge positions based on predictable co-movements between assets.

For instance, if exchange rates and interest rates are found to be cointegrated within an economy's context, monetary authorities might adjust policies with confidence about their long-term effects on currency stability.

Limitations and Critiques of the Engle-Granger Method

Despite its widespread use since it was introduced in 1987 by Robert Engle and Clive Granger, who shared the 2003 Nobel Memorial Prize in Economic Sciences, the method has notable limitations:

Linearity Assumption: It presumes linear relationships between variables; real-world economic interactions often involve nonlinearities.

Sensitivity to Outliers: Extreme values can distort regression estimates leading to incorrect conclusions about stationarity.

Single Cointegrating Vector: The method tests for only one long-run relationship at a time; systems with more than two variables may contain several cointegrating relationships, which require more advanced techniques like Johansen's test.

Structural Breaks Impact: Changes such as policy shifts or economic crises can break existing relationships temporarily or permanently but may not be detected properly by this approach unless explicitly modeled.

Understanding these limitations ensures users interpret results cautiously while considering supplementary analyses where necessary.

Recent Developments Enhancing Cointegration Testing

Since its introduction during the late 20th century, researchers have developed advanced tools building upon or complementing the Engle-Granger framework:

Johansen Test: A multivariate approach capable of identifying multiple cointegrating vectors simultaneously.

Vector Error Correction Models (VECM): These models incorporate short-term dynamics while maintaining insights into long-term equilibrium relations identified through cointegration analysis.

These developments improve robustness especially when analyzing complex datasets involving several interconnected economic indicators simultaneously—a common scenario in modern econometrics research.

Practical Applications Across Economics & Finance

Economists frequently employ Engle-Granger-based analyses when exploring topics like:

- Long-run purchasing power parity between currencies

- Relationship between stock indices across markets

- Linkages between macroeconomic indicators like GDP growth rate versus inflation

Financial institutions also utilize this methodology for arbitrage strategies where understanding asset price co-movements enhances investment decisions while managing risks effectively.

Summary Table: Key Aspects of the Engle-Granger Two-Step Method

| Aspect | Description |
|---|---|
| Purpose | Detects stable long-term relations among non-stationary variables |
| Main Components | Unit root testing + residual stationarity testing |
| Data Requirements | Variables should be integrated of order one (I(1)) |
| Limitations | Assumes linearity; sensitive to outliers & structural breaks |

By applying this structured approach thoughtfully—and recognizing its strengths alongside limitations—researchers gain valuable insights into how different economic factors interact over extended periods.

In essence, understanding how economies evolve requires tools capable of capturing enduring linkages amidst volatile short-term fluctuations. The Engle-Granger two-step method remains an essential component within this analytical toolkit—helping decode complex temporal interdependencies fundamental for sound econometric modeling and policy formulation.

JCUSER-IC8sJL1q

2025-05-09 22:52

What is the Engle-Granger two-step method for cointegration analysis?

What Is the Engle-Granger Two-Step Method for Cointegration Analysis?

The Engle-Granger two-step method is a foundational statistical approach used in econometrics to identify and analyze long-term relationships between non-stationary time series data. This technique helps economists, financial analysts, and policymakers understand whether variables such as interest rates, exchange rates, or commodity prices move together over time in a stable manner. Recognizing these relationships is essential for making informed decisions based on economic theories and market behaviors.

Understanding Cointegration in Time Series Data

Before diving into the specifics of the Engle-Granger method, it’s important to grasp what cointegration entails. In simple terms, cointegration occurs when two or more non-stationary time series are linked by a long-term equilibrium relationship. Although each individual series may exhibit trends or cycles—making them non-stationary—their linear combination results in a stationary process that fluctuates around a constant mean.

For example, consider the prices of two related commodities like oil and gasoline. While their individual prices might trend upward over years due to inflation or market dynamics, their price difference could remain relatively stable if they are economically linked. Detecting such relationships allows analysts to model these variables more accurately and forecast future movements effectively.

The Two Main Steps of the Engle-Granger Method

The Engle-Granger approach simplifies cointegration testing into two sequential steps:

Step 1: Testing for Unit Roots (Stationarity) in Individual Series

Initially, each time series under consideration must be tested for stationarity using unit root tests such as the Augmented Dickey-Fuller (ADF) test. Non-stationary data typically show persistent trends or cycles that violate many classical statistical assumptions.

If both series are found to be non-stationary—meaning they possess unit roots—the next step involves examining whether they share a cointegrated relationship. Conversely, if either series is stationary from the outset, traditional regression analysis might suffice without further cointegration testing.

Step 2: Estimating Long-Run Relationship and Testing Residuals

Once confirmed that both variables are integrated of order one (I(1)), meaning they become stationary after differencing once, researchers regress one variable on another using ordinary least squares (OLS). This regression produces residuals representing deviations from this estimated long-term equilibrium relationship.

The critical part here is testing whether these residuals are stationary through another ADF test or similar methods. If residuals turn out to be stationary—that is they fluctuate around zero without trending—then it indicates that the original variables are indeed cointegrated; they move together over time despite being individually non-stationary.

Significance of Cointegration Analysis

Identifying cointegrated relationships has profound implications across economics and finance:

- Long-Term Forecasting: Recognizing stable relationships enables better prediction models.

- Policy Formulation: Governments can design policies knowing certain economic indicators tend to move together.

- Risk Management: Investors can hedge positions based on predictable co-movements between assets.

For instance, if exchange rates and interest rates are found to be cointegrated within an economy's context, monetary authorities might adjust policies with confidence about their long-term effects on currency stability.

Limitations and Critiques of the Engle-Granger Method

Despite its widespread use since its inception in 1987 by Clive Granger and Robert Engle—a Nobel laureate—the method does have notable limitations:

Linearity Assumption: It presumes linear relationships between variables; real-world economic interactions often involve nonlinearities.

Sensitivity to Outliers: Extreme values can distort regression estimates leading to incorrect conclusions about stationarity.

Single Cointegrating Vector: The method tests only for one possible long-run relationship at a time; complex systems with multiple equilibria require more advanced techniques like Johansen’s test.

Structural Breaks Impact: Changes such as policy shifts or economic crises can break existing relationships temporarily or permanently but may not be detected properly by this approach unless explicitly modeled.

Understanding these limitations ensures users interpret results cautiously while considering supplementary analyses where necessary.

Recent Developments Enhancing Cointegration Testing

Since its introduction during the late 20th century, researchers have developed advanced tools building upon or complementing the Engle-Granger framework:

Johansen Test: An extension capable of identifying multiple co-integrating vectors simultaneously within multivariate systems.

Vector Error Correction Models (VECM): These models incorporate short-term dynamics while maintaining insights into long-term equilibrium relations identified through cointegration analysis.
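To make the error-correction idea concrete, here is a small single-equation sketch in Python. It is not a full VECM: it regresses the change in y on last period's equilibrium error and the change in x, and it assumes the long-run relationship (intercept 0, slope 2) is already known from a first-step regression. The data is simulated and `fit_ecm` is a hypothetical helper name.

```python
import random


def fit_ecm(x, y, intercept, beta):
    """Fit delta y_t = adjustment * e_{t-1} + short_run * delta x_t by OLS.

    e_{t-1} = y_{t-1} - intercept - beta * x_{t-1} is the lagged
    deviation from the long-run relationship.
    """
    e_lag = [y[t - 1] - intercept - beta * x[t - 1] for t in range(1, len(y))]
    dx = [x[t] - x[t - 1] for t in range(1, len(x))]
    dy = [y[t] - y[t - 1] for t in range(1, len(y))]
    # Normal equations for two regressors, solved with Cramer's rule.
    s11 = sum(e * e for e in e_lag)
    s12 = sum(e * d for e, d in zip(e_lag, dx))
    s22 = sum(d * d for d in dx)
    r1 = sum(e * v for e, v in zip(e_lag, dy))
    r2 = sum(d * v for d, v in zip(dx, dy))
    det = s11 * s22 - s12 * s12
    adjustment = (r1 * s22 - s12 * r2) / det
    short_run = (s11 * r2 - s12 * r1) / det
    return adjustment, short_run


# Simulated cointegrated pair (illustrative assumption): y = 2*x + noise.
rng = random.Random(1)
x = [0.0]
for _ in range(499):
    x.append(x[-1] + rng.gauss(0, 1))
y = [2.0 * xi + rng.gauss(0, 1) for xi in x]

adjustment, short_run = fit_ecm(x, y, intercept=0.0, beta=2.0)
print(f"adjustment={adjustment:.2f}  short_run={short_run:.2f}")
```

A negative adjustment coefficient means deviations from equilibrium are pulled back over time. In this simulation it should come out close to -1, because the noise is independent across periods and each deviation is corrected almost immediately.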

These developments improve robustness especially when analyzing complex datasets involving several interconnected economic indicators simultaneously—a common scenario in modern econometrics research.

Practical Applications Across Economics & Finance

Economists frequently employ Engle-Granger-based analyses when exploring topics like:

- Long-run purchasing power parity between currencies

- Relationship between stock indices across markets

- Linkages between macroeconomic indicators like GDP growth rate versus inflation

Financial institutions also utilize this methodology for arbitrage strategies where understanding asset price co-movements enhances investment decisions while managing risks effectively.

Summary Table: Key Aspects of the Engle-Granger Two-Step Method

| Aspect | Description |
|---|---|
| Purpose | Detects stable long-term relations among non-stationary variables |
| Main Components | Unit root testing + residual stationarity testing |
| Data Requirements | Variables should be integrated of order one (I(1)) |
| Limitations | Assumes linearity; sensitive to outliers & structural breaks |

By applying this structured approach thoughtfully—and recognizing its strengths alongside limitations—researchers gain valuable insights into how different economic factors interact over extended periods.

In essence, understanding how economies evolve requires tools capable of capturing enduring linkages amidst volatile short-term fluctuations. The Engle-Granger two-step method remains an essential component within this analytical toolkit—helping decode complex temporal interdependencies fundamental for sound econometric modeling and policy formulation.

Disclaimer:Contains third-party content. Not financial advice.

See Terms and Conditions.

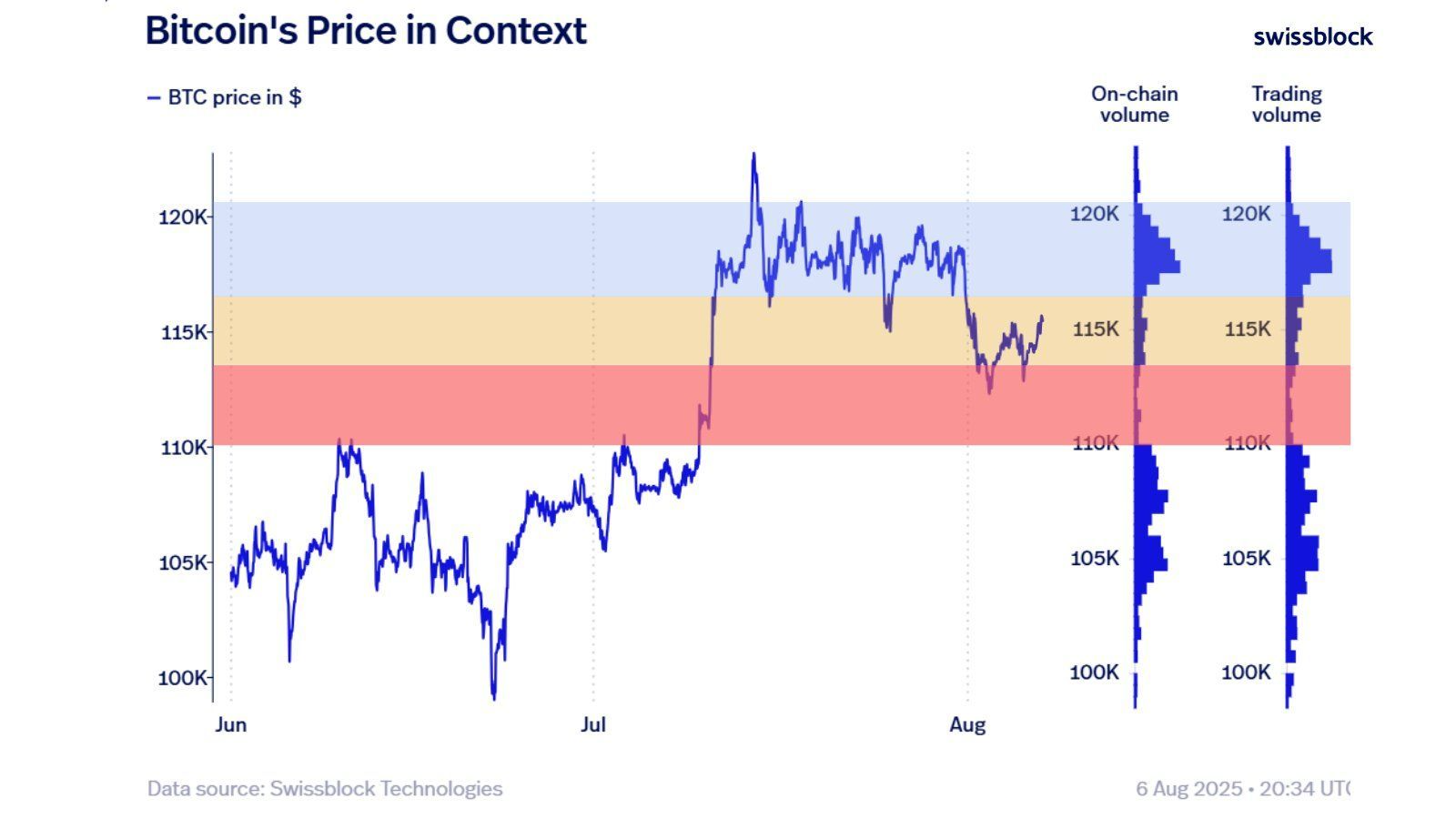

But beneath this calm surface, the zones are taking shape…

🟥 Red zone: support held, but it remains a minefield

🟨 Yellow zone: between neutrality and a psychological pivot

🟦 Blue zone: a high-volume battleground. And if $BTC/USDT reaches it… that could trigger new momentum.

🔍 This type of setup is often the scene of violent and unpredictable moves.

🧠 This is not the time to FOMO, but to observe... strategically.

💬 Are you team breakout or team range? Tell me what you see in the chart.

#BTC #PriceAction #CryptoMarket

Carmelita

2025-08-07 14:10

🎯 The Bitcoin market is in "stand-by" mode: no bullish breakout, no collapse…


How to Protect Your Crypto Assets from Scams

Cryptocurrency investments have surged in popularity, but with this growth comes an increased risk of scams and security breaches. Whether you're a seasoned trader or just starting out, understanding how to safeguard your digital assets is essential. This guide covers the most effective strategies to protect your crypto holdings from common threats like phishing, fraud, and hacking.

Understanding Common Cryptocurrency Scams

Crypto scams come in various forms, often targeting individuals who are less familiar with digital security practices. Phishing remains one of the most prevalent tactics—fraudulent emails or messages impersonate legitimate exchanges or service providers to steal private keys or login credentials. Ponzi schemes promise high returns but collapse once new investors stop joining. Fake exchanges lure users into depositing funds that are never recovered, while social engineering attacks manipulate individuals into revealing sensitive information.

Recent incidents highlight these risks: for example, a widespread toll road scam via text messages has been circulating across the U.S., emphasizing how scammers exploit public trust and fear. Additionally, ransomware attacks on organizations like PowerSchool demonstrate ongoing extortion threats that can impact both institutions and individual users.

Use Secure Wallets for Storing Crypto Assets

A critical step in safeguarding your cryptocurrencies is choosing secure wallets designed specifically for crypto storage. Hardware wallets such as Ledger Nano S/X and Trezor offer cold storage: private keys are kept offline, out of reach of most online attacks, which significantly reduces vulnerability compared to hot wallets connected directly to the internet.

Multi-signature wallets add an extra layer of security by requiring multiple approvals before any transaction can be executed. This setup prevents unauthorized transfers even if one device or key is compromised. Always opt for reputable wallet providers with strong security track records rather than unverified options promising quick gains.

Enable Two-Factor Authentication (2FA)

Adding two-factor authentication (2FA) on all accounts related to cryptocurrency activities dramatically enhances account security. 2FA requires a second verification step—such as a code sent via SMS or generated through an authenticator app like Google Authenticator—to access your exchange accounts or wallets.

This measure means that even if someone obtains your password through phishing or a data breach, they cannot access your assets without the second factor. That is a crucial safeguard: recent data breaches at platforms like Coinbase exposed user information but did not necessarily compromise assets directly when 2FA was enabled.
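To show what an authenticator app computes, here is a minimal sketch of the standard time-based one-time password scheme (TOTP, RFC 6238, built on HOTP from RFC 4226) using only the Python standard library. The secret below is the public test secret from the RFCs, not a real credential. Real apps typically receive the secret Base32-encoded in a QR code, which this sketch leaves out.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low 4 bits of the last byte
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based variant (RFC 6238): the counter is the current 30-second window."""
    now = time.time() if at_time is None else at_time
    return hotp(secret, int(now // step), digits)


rfc_test_secret = b"12345678901234567890"  # published RFC test secret
print(hotp(rfc_test_secret, 1))                     # RFC 4226 vector: 287082
print(totp(rfc_test_secret, at_time=59, digits=8))  # RFC 6238 vector: 94287082
print(totp(rfc_test_secret))                        # code for the current window
```

Because the code depends on a shared secret and the current time window, a stolen password alone is not enough to log in. A server would also compare codes in constant time and usually accept the adjacent window to tolerate clock drift.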

Be Vigilant Against Phishing Attempts

Phishing remains one of the leading causes of asset theft in crypto markets today. Always verify URLs before entering login details; scammers often create fake websites resembling legitimate exchanges such as Binance or Coinbase to trick users into revealing private keys or passwords.

Avoid clicking links from unsolicited emails or messages claiming urgent issues with your account unless you confirm their authenticity through official channels. Remember: reputable services will never ask you for sensitive information via email nor request private keys under any circumstances.
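The URL check can be partly automated. The sketch below compares a link's scheme and exact hostname against a personal allowlist; the hostnames listed are placeholders to be replaced with addresses you have verified yourself, and `looks_trusted` is a hypothetical helper name. It catches lookalike hosts but is no substitute for using your own bookmarks.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist: replace with hosts you have verified yourself.
TRUSTED_HOSTS = {"www.binance.com", "www.coinbase.com"}


def looks_trusted(url: str) -> bool:
    """Accept only HTTPS links whose exact hostname is on the allowlist."""
    parts = urlsplit(url)
    return parts.scheme == "https" and parts.hostname in TRUSTED_HOSTS


for link in [
    "https://www.coinbase.com/signin",
    "https://www.coinbase.com.login-verify.example/signin",  # lookalike subdomain trick
    "https://www.binance.com@evil.example/",                 # userinfo trick
    "http://www.binance.com/",                               # not HTTPS
]:
    print(looks_trusted(link), link)
```

Matching the full hostname, rather than searching for a brand name somewhere in the URL, is the point of the design: phishing links often contain the legitimate name as a subdomain or as the part before an `@`.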

Keep Software Up-to-Date

Cybercriminals frequently exploit vulnerabilities in outdated software systems—be it operating systems, browsers, or wallet applications—to gain unauthorized access to devices containing crypto assets. Regularly updating all software ensures you benefit from patches fixing known security flaws.

Set automatic updates where possible and avoid downloading files from untrusted sources. Using up-to-date antivirus programs adds another layer of defense against malware designed explicitly for stealing cryptocurrencies stored on infected devices.

Monitor Accounts Regularly

Active monitoring helps detect suspicious activity early before significant damage occurs. Many exchanges provide alert features—for example, notifications about large transactions—that enable prompt responses if something unusual happens within your account history.

Periodically review transaction histories across all platforms linked with your holdings; unfamiliar transfers should trigger immediate investigation and potential reporting to authorities if necessary.
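A periodic review like this can be scripted against an exported transaction history. The sketch below is illustrative only: the `Transfer` fields, the address names, and the threshold are assumptions, not any exchange's actual export format.

```python
from dataclasses import dataclass


@dataclass
class Transfer:
    tx_id: str
    destination: str
    amount: float


def flag_suspicious(transfers, known_addresses, large_amount):
    """Return (tx_id, reasons) for transfers that deserve a closer look."""
    flagged = []
    for t in transfers:
        reasons = []
        if t.destination not in known_addresses:
            reasons.append("unfamiliar destination")
        if t.amount >= large_amount:
            reasons.append("large amount")
        if reasons:
            flagged.append((t.tx_id, reasons))
    return flagged


# Hypothetical history and address book.
history = [
    Transfer("t1", "addr_known", 0.1),
    Transfer("t2", "addr_new", 0.1),
    Transfer("t3", "addr_known", 5.0),
]
for tx_id, reasons in flag_suspicious(history, {"addr_known"}, large_amount=1.0):
    print(tx_id, reasons)
```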

Educate Yourself About Security Best Practices

Staying informed about emerging scams and evolving cybersecurity techniques reduces your exposure to threats over time. Follow trusted industry sources such as official exchange blogs and cybersecurity news outlets that cover blockchain technology, and participate in community forums where experienced traders share insights. Understanding concepts like seed phrase recovery further improves resilience against hardware failures while keeping control of private keys that are stored securely offline.

Choose Reputable Cryptocurrency Exchanges

Not all trading platforms are created equal; some lack robust security measures, making them attractive targets for hackers. Prioritize well-established exchanges known for strong regulatory compliance, multi-layered security protocols, and transparent operational histories. Avoid new entrants without verifiable credentials, which may be more vulnerable due to weaker defenses.

Diversify Your Investments

Spreading investments across multiple cryptocurrencies reduces exposure to the volatility of any single token and to scams aimed at specific coins. Diversification also limits potential losses should one asset become compromised due to unforeseen vulnerabilities.

Use Advanced Security Tools

Beyond basic protections like 2FA and secure wallets, consider deploying additional tools:

- Anti-virus software capable of detecting malware targeting cryptocurrency files

- Virtual Private Networks (VPNs) ensuring encrypted online activity

- Hardware-based biometric authentication methods where available

These measures collectively strengthen defenses against cyberattacks aimed at stealing digital assets.

Report Suspicious Activity Promptly

If you encounter suspicious emails, links claiming false promotions, unexpected transfer requests—or notice irregularities within accounts—report immediately:

- Contact customer support through verified channels

- Notify relevant authorities such as local cybercrime units

Sharing experiences within trusted communities helps others recognize similar threats early.

Staying Ahead: The Future of Crypto Asset Security

Recent developments indicate increasing sophistication among scammers alongside advancements in protective technologies:

- Enhanced AI-driven fraud detection models by companies like Stripe now identify hundreds more subtle transaction signals—raising detection rates significantly

- Major players including Google are expanding their online scam protection features beyond tech support fraud toward broader threat categories

At the same time, evolving regulations aim to create safer environments but require active participation from users committed to best practices.

By adopting comprehensive safety measures, from using secure hardware wallets and enabling two-factor authentication to staying informed about the latest scams, you can significantly reduce the risks associated with cryptocurrency investments.

Remember: Protecting digital assets isn’t a one-time effort but an ongoing process requiring vigilance amid constantly changing threat landscapes.

JCUSER-F1IIaxXA

2025-05-22 06:04

What are the most effective ways to protect my crypto assets from scams?


Understanding Builder-Extractor-Sequencer (BES) Architectures

Builder-Extractor-Sequencer (BES) architectures are a modern approach to managing complex data processing tasks, especially within blockchain and cryptocurrency systems. As digital assets and decentralized applications grow in scale and complexity, traditional data handling methods often struggle to keep up. BES architectures offer a scalable, efficient solution by breaking down the data processing workflow into three specialized components: the builder, extractor, and sequencer.

This architecture is gaining recognition for its ability to handle high transaction volumes while maintaining data integrity and order—crucial factors in blockchain technology. By understanding each component's role and how they work together, developers can design systems that are both robust and adaptable to future technological advancements.

What Are the Core Components of BES Architecture?

A BES system is built around three core modules that perform distinct functions:

1. Builder

The builder acts as the initial point of contact for incoming data from various sources such as user transactions, sensors, or external APIs. Its primary responsibility is collecting this raw information efficiently while ensuring completeness. The builder aggregates data streams into manageable batches or blocks suitable for further processing.

In blockchain contexts, the builder might gather transaction details from multiple users or nodes before passing them along for validation or inclusion in a block. Its effectiveness directly impacts overall system throughput because it determines how quickly new data enters the pipeline.

2. Extractor

Once the builder has collected raw data, it moves on to extraction—the process handled by the extractor component. This module processes incoming datasets by filtering relevant information, transforming formats if necessary (e.g., converting JSON to binary), and performing preliminary validations.

For example, in smart contract execution environments, extractors might parse transaction inputs to identify specific parameters needed for contract activation or verify signatures before passing validated info downstream. The extractor ensures that only pertinent and correctly formatted data proceeds further—reducing errors downstream.

3. Sequencer

The final piece of a BES architecture is responsible for organizing processed information into an ordered sequence suitable for application use—this is where the sequencer comes into play. It arranges extracted data based on timestamps or logical dependencies so that subsequent operations like consensus algorithms or ledger updates occur accurately.

In blockchain networks like Bitcoin or Ethereum, blocks are ordered by height and transactions are placed in a fixed order within each block by the block producer. A single agreed ordering is a critical factor in maintaining trustless consensus mechanisms.
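The three components described above can be sketched as a small Python pipeline. This is an illustrative toy under stated assumptions, not a reference implementation: the `Tx` fields, the JSON message format, and the tie-breaking rule in the sequencer are all hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Iterable, Iterator, List


@dataclass(frozen=True)
class Tx:
    sender: str
    nonce: int
    timestamp: float
    payload: str


def build(raw_messages: Iterable[str], batch_size: int) -> Iterator[List[str]]:
    """Builder: collect raw messages into fixed-size batches."""
    batch: List[str] = []
    for msg in raw_messages:
        batch.append(msg)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch  # final partial batch


def extract(batch: List[str]) -> List[Tx]:
    """Extractor: parse JSON and drop malformed or incomplete records."""
    out = []
    for msg in batch:
        try:
            rec = json.loads(msg)
            out.append(Tx(str(rec["sender"]), int(rec["nonce"]),
                          float(rec["timestamp"]), str(rec.get("payload", ""))))
        except (ValueError, KeyError, TypeError):
            continue  # filtered out before sequencing
    return out


def sequence(txs: Iterable[Tx]) -> List[Tx]:
    """Sequencer: order by timestamp, with sender and nonce as tie-breakers."""
    return sorted(txs, key=lambda t: (t.timestamp, t.sender, t.nonce))


raw = [
    '{"sender": "alice", "nonce": 1, "timestamp": 3.0, "payload": "pay bob"}',
    "not json",
    '{"sender": "bob", "nonce": 7, "timestamp": 1.0}',
    '{"sender": "carol", "timestamp": 2.0}',
]
ordered = sequence(tx for batch in build(raw, batch_size=2) for tx in extract(batch))
print([t.sender for t in ordered])  # ['bob', 'alice']
```

Keeping each stage a plain function with a narrow input and output makes it possible to test, replace, or scale one stage without touching the others.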

Practical Applications of BES Architectures

BES architectures find their most natural fit within systems requiring high throughput combined with strict ordering guarantees:

Blockchain Transaction Management: They streamline transaction collection from multiple sources (builder), validate content (extractor), then order transactions chronologically before adding them onto blocks via miners/validators.

Data Analytics Platforms: Large-scale analytics tools utilize BES structures to ingest vast datasets rapidly; extract meaningful features; then organize insights logically—enabling real-time trend detection.

Smart Contract Execution: In decentralized finance (DeFi) platforms where numerous conditions must be met simultaneously before executing contracts — such as collateral checks — BES helps manage input flow efficiently while preserving correct execution order.

By modularizing these steps into dedicated components with clear responsibilities—and optimizing each independently—systems can achieve higher scalability without sacrificing security or accuracy.

Recent Innovations Enhancing BES Systems

Recent developments have focused on improving scalability through integration with emerging technologies:

Blockchain Scalability Solutions

As demand surges driven by DeFi applications and NFTs (non-fungible tokens), traditional blockchains face congestion issues. Adapting BES architectures allows these networks to process more transactions concurrently by optimizing each component’s performance—for example:

- Parallelizing building processes

- Using advanced filtering techniques during extraction

- Implementing sophisticated sequencing algorithms based on timestamps

These improvements help maintain low latency even during peak usage periods.
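As a rough illustration of parallelizing one stage, the sketch below validates several batches concurrently with Python's standard thread pool. The validation rule and the batch format are hypothetical. Threads mainly help when the work is I/O-bound (for example, network lookups); CPU-heavy validation in Python would usually need processes instead.

```python
from concurrent.futures import ThreadPoolExecutor


def validate_batch(batch):
    """Hypothetical validation: keep transfers with a positive amount."""
    return [tx for tx in batch if tx.get("amount", 0) > 0]


def validate_in_parallel(batches, max_workers=4):
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map yields results in input order, so batch order is preserved.
        return list(pool.map(validate_batch, batches))


batches = [
    [{"id": 1, "amount": 5}, {"id": 2, "amount": 0}],
    [{"id": 3, "amount": -1}],
    [{"id": 4, "amount": 2}],
]
print(validate_in_parallel(batches))
```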

Cloud Computing Integration

Cloud services enable dynamic resource allocation, which complements BES workflows well:

- Builders can scale up during traffic spikes

- Extractors benefit from distributed computing power

- Sequencers leverage cloud-based databases for rapid organization

This flexibility enhances reliability across diverse operational environments—from private enterprise chains to public networks.

Artificial Intelligence & Machine Learning Enhancements

AI/ML models now assist each phase:

- Builders predict incoming load patterns,

- Extractors automatically identify relevant features,

- Sequencers optimize ordering based on predictive analytics.

Such integrations lead not only toward increased efficiency but also improved adaptability amid evolving workloads—a key advantage given rapid technological changes in blockchain landscapes.

Challenges Facing BES Architectures: Security & Privacy Concerns

Despite their advantages, implementing BES architectures involves navigating several challenges:

Security Risks: Since builders aggregate sensitive transactional information from multiple sources—including potentially untrusted ones—they become attractive targets for malicious actors aiming at injecting false data or disrupting workflows through denial-of-service attacks.

Data Privacy Issues: Handling large volumes of user-specific information raises privacy concerns; without proper encryption protocols and access controls—as mandated under regulations like GDPR—the risk of exposing personal details increases significantly.

Technical Complexity: Integrating AI/ML modules adds layers of complexity requiring specialized expertise; maintaining system stability becomes more difficult when components depend heavily on accurate predictions rather than deterministic rules.

Best Practices For Deploying Effective BES Systems

To maximize benefits while mitigating risks associated with BES designs, consider these best practices:

Prioritize Security Measures

- Use cryptographic techniques such as digital signatures

- Implement multi-layered authentication protocols

- Regularly audit codebases

Ensure Data Privacy

- Encrypt sensitive datasets at rest/in transit

- Apply privacy-preserving computation methods where possible

Design Modular & Scalable Components

- Use microservices architecture principles

- Leverage cloud infrastructure capabilities

Integrate AI Responsibly

- Validate ML models thoroughly before deployment

- Monitor model performance continuously

How Builder-Extractor-Sequencer Fits Into Broader Data Processing Ecosystems

Understanding how BES fits within larger infrastructures reveals its strategic importance:

Traditional ETL pipelines focus mainly on batch processing of static datasets over extended periods, which may introduce latency. BES systems, in contrast, excel at real-time streaming scenarios where immediate insights matter. Their modular nature allows integration with distributed ledger technology (DLT) frameworks like Hyperledger Fabric or Corda, alongside conventional big-data tools such as Apache Kafka and Spark, all contributing toward enterprise-grade solutions capable of handling today's demanding workloads.

By dissecting each element’s role—from collection through transformation up until ordered delivery—developers gain clarity about designing resilient blockchain solutions capable of scaling securely amidst increasing demands worldwide.

Keywords: Blockchain architecture | Data processing | Cryptocurrency systems | Smart contracts | Scalability solutions | Distributed ledger technology

Lo

2025-05-14 13:42

What are builder-extractor-sequencer (BES) architectures?

Understanding Builder-Extractor-Sequencer (BES) Architectures

Builder-Extractor-Sequencer (BES) architectures are a modern approach to managing complex data processing tasks, especially within blockchain and cryptocurrency systems. As digital assets and decentralized applications grow in scale and complexity, traditional data handling methods often struggle to keep up. BES architectures offer a scalable, efficient solution by breaking down the data processing workflow into three specialized components: the builder, extractor, and sequencer.

This architecture is gaining recognition for its ability to handle high transaction volumes while maintaining data integrity and order—crucial factors in blockchain technology. By understanding each component's role and how they work together, developers can design systems that are both robust and adaptable to future technological advancements.

What Are the Core Components of BES Architecture?

A BES system is built around three core modules that perform distinct functions:

1. Builder

The builder acts as the initial point of contact for incoming data from various sources such as user transactions, sensors, or external APIs. Its primary responsibility is collecting this raw information efficiently while ensuring completeness. The builder aggregates data streams into manageable batches or blocks suitable for further processing.

In blockchain contexts, the builder might gather transaction details from multiple users or nodes before passing them along for validation or inclusion in a block. Its effectiveness directly impacts overall system throughput because it determines how quickly new data enters the pipeline.

2. Extractor

Once the builder has collected raw data, it moves on to extraction—the process handled by the extractor component. This module processes incoming datasets by filtering relevant information, transforming formats if necessary (e.g., converting JSON to binary), and performing preliminary validations.

For example, in smart contract execution environments, extractors might parse transaction inputs to identify specific parameters needed for contract activation or verify signatures before passing validated info downstream. The extractor ensures that only pertinent and correctly formatted data proceeds further—reducing errors downstream.

3. Sequencer

The final piece of a BES architecture is responsible for organizing processed information into an ordered sequence suitable for application use—this is where the sequencer comes into play. It arranges extracted data based on timestamps or logical dependencies so that subsequent operations like consensus algorithms or ledger updates occur accurately.

In blockchain networks like Bitcoin or Ethereum, sequencing ensures transactions are added sequentially according to their timestamp or block height—a critical factor in maintaining trustless consensus mechanisms.

Practical Applications of BES Architectures

BES architectures find their most natural fit within systems requiring high throughput combined with strict ordering guarantees:

Blockchain Transaction Management: They streamline transaction collection from multiple sources (builder), validate content (extractor), then order transactions chronologically before adding them onto blocks via miners/validators.

Data Analytics Platforms: Large-scale analytics tools utilize BES structures to ingest vast datasets rapidly; extract meaningful features; then organize insights logically—enabling real-time trend detection.

Smart Contract Execution: In decentralized finance (DeFi) platforms where numerous conditions must be met simultaneously before executing contracts — such as collateral checks — BES helps manage input flow efficiently while preserving correct execution order.

By modularizing these steps into dedicated components with clear responsibilities—and optimizing each independently—systems can achieve higher scalability without sacrificing security or accuracy.

Recent Innovations Enhancing BES Systems

Recent developments have focused on improving scalability through integration with emerging technologies:

Blockchain Scalability Solutions

As demand surges driven by DeFi applications and NFTs (non-fungible tokens), traditional blockchains face congestion issues. Adapting BES architectures allows these networks to process more transactions concurrently by optimizing each component’s performance—for example:

- Parallelizing building processes

- Using advanced filtering techniques during extraction

- Implementing sophisticated sequencing algorithms based on timestamps

These improvements help maintain low latency even during peak usage periods.

Cloud Computing Integration

Cloud services enable dynamic resource allocation, which complements BES workflows well:

- Builders can scale up during traffic spikes

- Extractors benefit from distributed computing power

- Sequencers leverage cloud-based databases for rapid organization

This flexibility enhances reliability across diverse operational environments—from private enterprise chains to public networks.

Artificial Intelligence & Machine Learning Enhancements

AI/ML models now assist each phase:

- Builders predict incoming load patterns.

- Extractors automatically identify relevant features.

- Sequencers optimize ordering based on predictive analytics.

Such integrations lead not only toward increased efficiency but also improved adaptability amid evolving workloads—a key advantage given rapid technological changes in blockchain landscapes.

Challenges Facing BES Architectures: Security & Privacy Concerns

Despite their advantages, implementing BES architectures involves navigating several challenges:

Security Risks: Because builders aggregate sensitive transactional information from multiple sources, including potentially untrusted ones, they become attractive targets for malicious actors who aim to inject false data or disrupt workflows through denial-of-service attacks.

Data Privacy Issues: Handling large volumes of user-specific information raises privacy concerns; without proper encryption protocols and access controls—as mandated under regulations like GDPR—the risk of exposing personal details increases significantly.

Technical Complexity: Integrating AI/ML modules adds layers of complexity requiring specialized expertise; maintaining system stability becomes more difficult when components depend heavily on accurate predictions rather than deterministic rules.

Best Practices For Deploying Effective BES Systems

To maximize benefits while mitigating the risks associated with BES designs, consider these best practices:

Prioritize Security Measures

- Use cryptographic techniques such as digital signatures

- Implement multi-layered authentication protocols

- Regularly audit codebases

Ensure Data Privacy

- Encrypt sensitive datasets at rest/in transit

- Apply privacy-preserving computation methods where possible

Design Modular & Scalable Components

- Use microservices architecture principles

- Leverage cloud infrastructure capabilities

Integrate AI Responsibly

- Validate ML models thoroughly before deployment

- Monitor model performance continuously

How Builder-Extractor-Sequencer Fits Into Broader Data Processing Ecosystems

Understanding how BES fits within larger infrastructures reveals its strategic importance:

Traditional ETL pipelines focus mainly on batch processing of static datasets over extended periods, which may introduce latency. BES systems, in contrast, excel at real-time streaming scenarios where immediate insights matter. Their modular nature allows integration with distributed ledger technology (DLT) frameworks like Hyperledger Fabric or Corda, as well as with conventional big-data tools such as Apache Kafka and Spark, so BES components can form part of enterprise-grade solutions built for demanding workloads.

By dissecting each element’s role—from collection through transformation up until ordered delivery—developers gain clarity about designing resilient blockchain solutions capable of scaling securely amidst increasing demands worldwide.

Keywords: Blockchain architecture | Data processing | Cryptocurrency systems | Smart contracts | Scalability solutions | Distributed ledger technology


What Are Software Features That Support Common-Size Financial Analysis?

Common-size analysis is a fundamental technique in financial analysis that allows investors, analysts, and corporate managers to compare companies or track performance over time by standardizing financial statements. As the demand for accurate and efficient analysis grows, software tools have evolved to incorporate features that streamline this process. These features not only improve accuracy but also enhance visualization and interpretability of complex data.

Financial statement analysis tools embedded within various software platforms typically include templates specifically designed for common-size statements. These templates automate the calculation of percentages—such as expressing each line item on an income statement as a percentage of total revenue or each balance sheet item as a percentage of total assets—saving users considerable time and reducing manual errors. Automated calculations ensure consistency across analyses, which is crucial when comparing multiple companies or historical periods.
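The percentage calculation these templates automate is simple to state. The sketch below assumes a statement held as a plain dictionary; the figures are invented for illustration, and the function name `common_size` is hypothetical rather than taken from any particular product.

```python
def common_size(statement, base_item):
    """Express every line item as a percentage of the chosen base item."""
    base = statement[base_item]
    if base == 0:
        raise ValueError("base item must be non-zero")
    return {item: round(100 * value / base, 1) for item, value in statement.items()}

# Hypothetical, simplified income statement (ignores interest and taxes).
income_statement = {
    "Revenue": 500_000,
    "Cost of goods sold": 300_000,
    "Operating expenses": 120_000,
    "Net income": 80_000,
}
pct = common_size(income_statement, "Revenue")
for item, share in pct.items():
    print(f"{item}: {share}%")
```

For a balance sheet, the same function would be called with total assets as the base item instead of revenue.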

Data visualization capabilities are another critical feature in modern financial software supporting common-size analysis. Visual representations like bar charts, pie charts, and trend lines help users quickly grasp key insights from their data. For example, visualizing expense categories as proportions of total revenue can reveal cost structure trends over time or highlight areas where efficiency improvements could be made.

Access to comprehensive historical data is vital for meaningful common-size comparisons across different periods or industry benchmarks. Many advanced platforms provide extensive archives of past financial reports, enabling users to perform longitudinal studies that identify patterns or shifts in company performance over years. This historical perspective adds depth to the analysis by contextualizing current figures within broader trends.

In addition to core functionalities, some tools integrate access to earnings reports and stock split histories directly within their interface. Understanding how stock splits impact share prices or how earnings fluctuate after specific events helps refine the interpretation of common-size results by accounting for changes in capital structure.

Furthermore, integration with market data feeds and analyst ratings enhances the analytical context around a company's financials. Market sentiment indicators can influence how one interprets ratios derived from common-size statements—providing a more holistic view that combines quantitative metrics with qualitative insights from industry experts.

Recent Innovations Enhancing Common-Size Analysis Software

The landscape of software supporting common-size analysis has seen significant advancements recently — particularly in areas related to data visualization and automation through artificial intelligence (AI) and machine learning (ML). Enhanced visualization techniques now allow analysts not only to generate static charts but also interactive dashboards where they can drill down into specific segments or compare multiple datasets side-by-side effortlessly.

These innovations make it easier for users at all levels—from seasoned professionals to individual investors—to interpret complex datasets without requiring deep technical expertise. For instance, dynamic heat maps highlighting anomalies across different periods enable quick identification of outliers needing further investigation.
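The kind of check that sits behind such a highlight can be sketched without any charting library. The example below flags periods whose common-size value is far from the median of the series. The 2.0 percentage-point threshold and the sample figures are assumptions chosen for illustration; a real tool would likely use a more robust rule.

```python
from statistics import median

def flag_outliers(series, max_gap=2.0):
    """Return the periods whose value is more than `max_gap` points from the median."""
    center = median(series.values())
    return [period for period, value in series.items() if abs(value - center) > max_gap]

# Hypothetical operating expenses as a percentage of revenue, by year.
opex_pct = {2019: 30.1, 2020: 29.8, 2021: 30.4, 2022: 30.0, 2023: 36.5}
outliers = flag_outliers(opex_pct)
print(outliers)  # [2023]
```

The median is used instead of the mean so that a single extreme year does not shift the reference point it is compared against.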

Accessibility has also improved dramatically due to widespread availability of cloud-based solutions offering real-time updates on market conditions alongside financial data repositories accessible via subscription models or open APIs (Application Programming Interfaces). This democratization means small businesses and individual investors now have powerful tools previously limited mainly to large corporations with dedicated finance teams.

The integration of AI/ML algorithms marks one of the most transformative recent developments in this field. These intelligent systems can automatically detect patterns such as declining margins or rising debt ratios across multiple years without manual intervention — providing early warning signals that might otherwise go unnoticed until too late. They also assist in scenario modeling by simulating potential outcomes based on varying assumptions about future revenues or costs derived from historical trends observed through common-size frameworks.
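A learned model is not required to illustrate the idea of an early warning signal. The sketch below is a simple rule-based stand-in, not an ML algorithm: it flags a series when the most recent values have fallen year over year. The three-year window and the margin figures are assumptions made for the example.

```python
def declining_streak(values, min_years=3):
    """Return True if the last `min_years` values fall strictly year over year."""
    if len(values) < min_years:
        return False
    tail = values[-min_years:]
    return all(later < earlier for earlier, later in zip(tail, tail[1:]))

# Hypothetical gross margin as a percentage of revenue, oldest year first.
gross_margin_pct = [42.0, 43.1, 41.5, 39.8, 37.2]
flag = declining_streak(gross_margin_pct)
print(flag)  # True
```

A production system would typically combine several such signals and weigh them statistically, but the input is the same: common-size ratios tracked across periods.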

Regulatory changes are influencing how these analytical tools evolve too; new standards around transparency and disclosure require firms’ reporting practices—and consequently their analytical methods—to adapt accordingly. Software developers are continuously updating their platforms so they remain compliant while offering enhanced functionalities aligned with evolving standards like IFRS (International Financial Reporting Standards) or GAAP (Generally Accepted Accounting Principles).

Risks Linked With Heavy Dependence on Common-Size Analysis Tools

While these technological advancements significantly improve efficiency and insight generation, relying heavily on automated software features carries certain risks worth considering carefully:

Overreliance on Quantitative Data: Focusing predominantly on numerical outputs may lead analysts to neglect qualitative factors such as management quality, competitive positioning, and the regulatory environment, all of which influence overall company health.

Misinterpretation Risks: Without a proper understanding of what certain ratios mean in specific contexts (for example, high operating expenses relative to revenue), users can easily draw incorrect conclusions.

Technological Vulnerabilities: The increasing use of AI/ML raises concerns not only about technical issues like algorithm bias but also about cybersecurity threats targeting sensitive financial information stored within cloud-based systems.

To mitigate these risks effectively:

- Users should combine automated insights with expert judgment.

- Training programs should emphasize understanding underlying assumptions behind calculations.

- Regular audits must verify algorithms’ accuracy against known benchmarks.

By maintaining awareness around these potential pitfalls while leveraging advanced features responsibly—and always supplementing quantitative findings with qualitative assessments—users can maximize benefits while minimizing adverse outcomes associated with heavy reliance solely on technology-driven analyses.

How Software Enhances Accuracy And Efficiency In Common-Size Analysis

Modern software solutions significantly reduce the manual effort involved in preparing standardized financial statements through automation features such as batch processing, which handles large datasets efficiently and is especially useful when analyzing multiple entities simultaneously during peer comparisons.[1]

Moreover:

- Automated percentage calculations reduce the human error inherent in manual computations.

- Real-time updates ensure analyses reflect current market conditions.

- Interactive dashboards facilitate quick scenario testing.

- Export options support seamless sharing among stakeholders.

This combination accelerates decision-making processes while improving overall reliability—a critical advantage given today’s fast-paced business environment.[2]

Additionally, many platforms incorporate user-friendly interfaces designed specifically for non-expert users who need straightforward yet powerful tools without extensive training requirements.[1] Such accessibility broadens participation beyond specialized finance teams into departments like marketing or operations seeking strategic insights based on robust quantitative foundations provided by common-size frameworks.

Final Thoughts: The Future Of Common-Size Financial Software Tools

As technology continues to advance rapidly and AI becomes more sophisticated, the future landscape promises even more intuitive interfaces capable not only of automating routine tasks but also of providing predictive analytics rooted in machine learning models.[1]

Expect increased integration between external market intelligence sources—including news feeds—and internal company data streams; this will enable real-time contextualized analyses tailored precisely toward strategic decision-making needs.[2]

Furthermore:

- Enhanced customization options will allow tailored reporting aligned closely with organizational goals,

- Greater emphasis will be placed on ensuring compliance amid evolving regulatory environments,

- Continued focus will be placed upon safeguarding sensitive information against cyber threats,